Blog Speed Optimization

15 July, 2021

Motivation

One of my favourite pastimes is running various performance analyzers against this blog in hopes of getting a good score. Usually I do pretty well, mostly on account of this blog being completely static.

For reference, let’s compare some internet averages for various performance metrics and see how my blog stacks up against them. This page is used for the benchmarks. Averages are from the HTTP Archive, and performance data for this blog was gathered using Google Lighthouse.

Disclaimer: We are comparing apples to oranges, as most websites aren’t (and cannot be) static, but this was the best source of data I could find, so I’m using it regardless.

Desktop

| Category | Average | My blog |
|---|---|---|
| Page size | 2.1 MB (2124 KB) | 0.05 MB (50.6 KB) |
| Total requests | 73 | 21 |
| FCP (First Contentful Paint) | 2.1s | 0.7s |

Mobile

| Category | Average | My blog |
|---|---|---|
| Page size | 1.9 MB (1914 KB) | 0.05 MB (52 KB) |
| Total requests | 69 | 21 |
| FCP | 5.2s | 2.3s |

Optimizing the site

Google PageSpeed was used to identify the most pressing problems. Let’s go through them one by one and see what can be done to fix them.

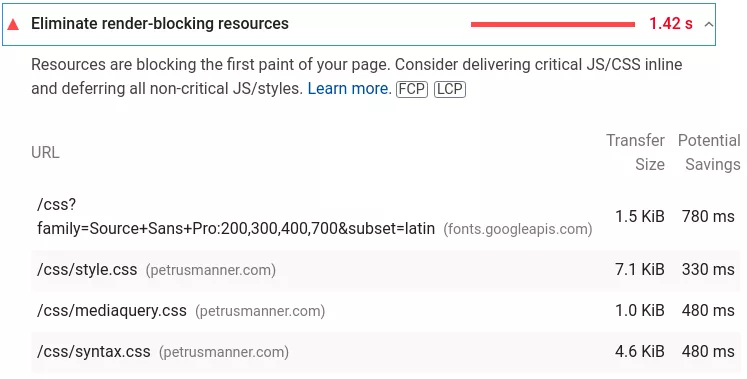

Eliminate render-blocking resources

The Google Fonts request is well optimized for size, as it only fetches certain weights and character sets, but the time it takes to complete could be improved. As for the CSS, it could be inlined into the main HTML file, saving time and eliminating a few extra requests.

One way to prevent CSS from blocking rendering would be to load it asynchronously with rel="preload". I considered this approach, but it turns out it does not work in Firefox, which makes this strategy a no-go.

There is also a hack to make this work, described here, which uses the media attribute available on link tags:

<link rel="stylesheet" type="text/css" href="[long url]" media="print" onload="this.media='all'">

Essentially, this works by setting the link tag’s media attribute to print, which means the stylesheet should only apply to print-based media, i.e. when the user is printing out the page.

This makes the request asynchronous. Once it completes, the onload handler sets the media attribute to all, applying the CSS to the page.
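The same trick can also be applied from a script. The following is a minimal sketch, not something this blog uses: the function name loadStylesheetAsync and the example path are made up, and the document object is passed in as a parameter so the function can be tried outside a browser.

```javascript
// Adds a stylesheet that does not block rendering, mirroring the
// media="print" trick: the link starts out as print-only and is
// switched to "all" once the file has finished loading.
function loadStylesheetAsync(doc, href) {
  const link = doc.createElement('link');
  link.rel = 'stylesheet';
  link.href = href;
  link.media = 'print'; // does not apply to the screen, so it is not render blocking
  link.onload = function () {
    link.onload = null; // run the switch only once
    link.media = 'all'; // now apply the CSS to the page
  };
  doc.head.appendChild(link);
  return link;
}

// In a browser (hypothetical path): loadStylesheetAsync(document, '/css/main.css');
```

Until the stylesheet arrives the page renders unstyled, so this suits non-critical CSS better than the main layout.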

This is a cool trick, but ultimately I decided that I don’t care, did nothing, and moved on. The Google Fonts requests, however, were eliminated by using sans-serif page-wide.

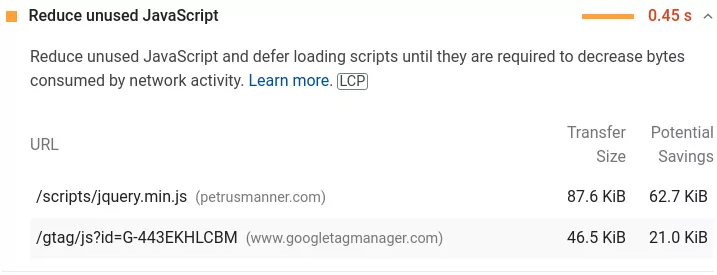

Reduce unused JavaScript

At the time of writing, this blog only has about 40 lines of JavaScript. Rewriting these in vanilla JS (instead of jQuery) should be trivial, and is frankly something I should have done to begin with.

Additionally, the script itself can be inlined, eliminating an extra request.

I threw out jQuery and rewrote the scripts in vanilla JS. I also inlined the script and placed it in the footer.

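// old jQuery function
$('#menu-button').click(function() {
    toggleMenuIcon();
    $('#menu').toggleClass('showMenu');
});

// new vanilla JS version
const menuButtonClicked = function() {
    toggleMenuIcon();
    // declare the variable locally instead of leaking an implicit global
    const menu = document.getElementById('menu');
    menu.classList.toggle('showMenu');
};

// bind the handler, as the jQuery .click() call above did
document.getElementById('menu-button').addEventListener('click', menuButtonClicked);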

After this I promptly decided to eliminate JavaScript altogether, so feel free to browse this blog with Lynx.

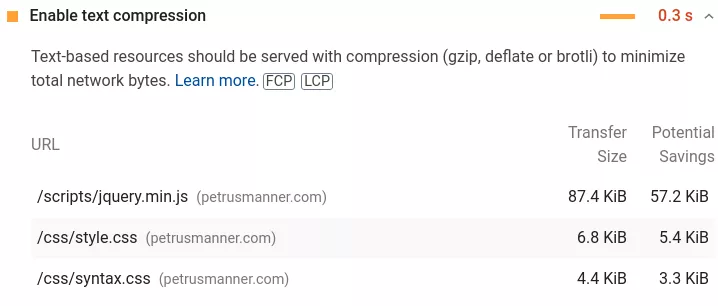

Enable text compression

I honestly thought I already had file compression enabled in Nginx, but apparently this was not the case.

The following gzip directives were added to the Nginx configuration. Files are now compressed before transfer, reducing the number of bytes sent over the wire.

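# compress responses on the fly
gzip on;
# serve a pre-compressed .gz file when one exists next to the original
gzip_static on;
# MIME types to compress in addition to text/html, which is always compressed
gzip_types text/plain text/css text/javascript;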
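To get a feel for how much gzip helps with text, here is a small Node.js sketch using the built-in zlib module. The sample markup is invented and far more repetitive than a real page, so the sizes it prints are an illustration rather than a measurement of this blog.

```javascript
const zlib = require('zlib');

// Hypothetical, highly repetitive HTML standing in for a real page.
const sampleHtml =
  '<li class="post"><a href="/posts/example">Example post</a></li>\n'.repeat(200);

// Returns the byte count of a string before and after gzip compression.
function gzipSizes(text) {
  const raw = Buffer.from(text, 'utf8');
  const compressed = zlib.gzipSync(raw);
  return { rawBytes: raw.length, gzipBytes: compressed.length };
}

const sizes = gzipSizes(sampleHtml);
console.log(sizes);
```

Text formats such as HTML and CSS compress well because they repeat the same tags and property names; formats that are already compressed, like PNG, gain very little.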

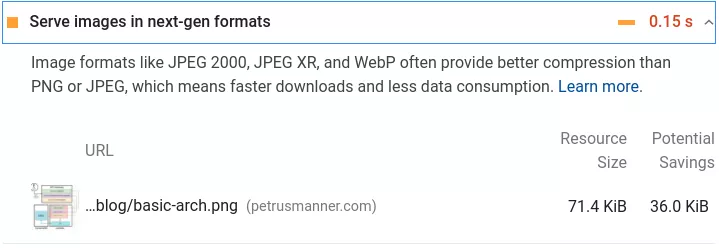

Serve images in next-gen formats

There is an apt package for a WebP CLI tool, so I used that together with this script I found to mass-convert all of my PNG files to WebP:

#!/bin/bash
# $1 is the directory to scan. Files that already have a .webp sibling are skipped.

# converting JPEG images (lossy, quality 90)
find "$1" -type f \( -iname "*.jpg" -o -iname "*.jpeg" \) \
    -exec bash -c '
    # swap the file extension for .webp
    webp_path="${0%.*}.webp"
    if [ ! -f "$webp_path" ]; then
        cwebp -quiet -q 90 "$0" -o "$webp_path"
    fi' {} \;

# converting PNG images (lossless)
find "$1" -type f -iname "*.png" \
    -exec bash -c '
    webp_path="${0%.*}.webp"
    if [ ! -f "$webp_path" ]; then
        cwebp -quiet -lossless "$0" -o "$webp_path"
    fi' {} \;

This works, but I once again decided I don’t care and just kept rocking PNGs.

Speed gains and conclusions

With the optimizations done, it’s time to compare the performance numbers:

Desktop

| Category | Old | New | Reduction |
|---|---|---|---|
| Page size | 0.05 MB (50.6 KB) | 0.03 MB (28.1 KB) | 44% (22.5 KB) |
| Total requests | 21 | 12 | 43% (9) |
| FCP | 0.7s | 0.3s | 57% (0.4s) |

Mobile

| Category | Old | New | Reduction |
|---|---|---|---|
| Page size | 0.05 MB (50.6 KB) | 0.03 MB (28.3 KB) | 44% (22.3 KB) |
| Total requests | 21 | 12 | 43% (9) |
| FCP | 2.3s | 1.0s | 57% (1.3s) |
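The percentages in the last column are relative changes from the old value to the new one. Here is a small sketch of that arithmetic; the helper name percentReduction is my own, and the inputs are taken from the tables above.

```javascript
// Relative reduction from oldValue to newValue, rounded to a whole percent.
function percentReduction(oldValue, newValue) {
  return Math.round(((oldValue - newValue) / oldValue) * 100);
}

console.log(percentReduction(50.6, 28.1)); // desktop page size in KB
console.log(percentReduction(21, 12));     // total requests
console.log(percentReduction(2.3, 1.0));   // mobile FCP in seconds
```

Working from the KB figures rather than the rounded MB figures matters here, since 0.05 MB and 0.03 MB are too coarse to give a meaningful percentage.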

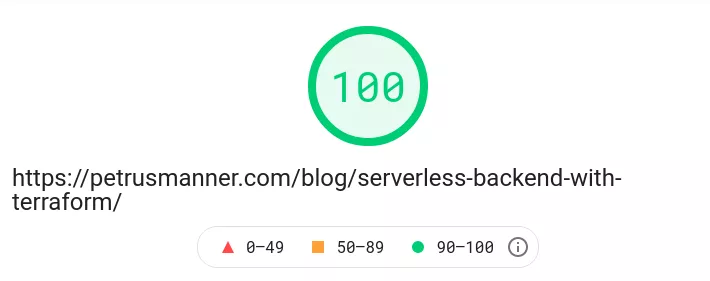

After these optimizations, Google PageSpeed now gives me a perfect score for speed: